How to Use Many Key Management Service Keys Inside One S3 Bucket

Recently I had a chance to try using several KMS keys inside a single S3 bucket. This seemingly simple task still gave me a small surprise. Here I’d like to share how you can do it, and what may surprise you too.

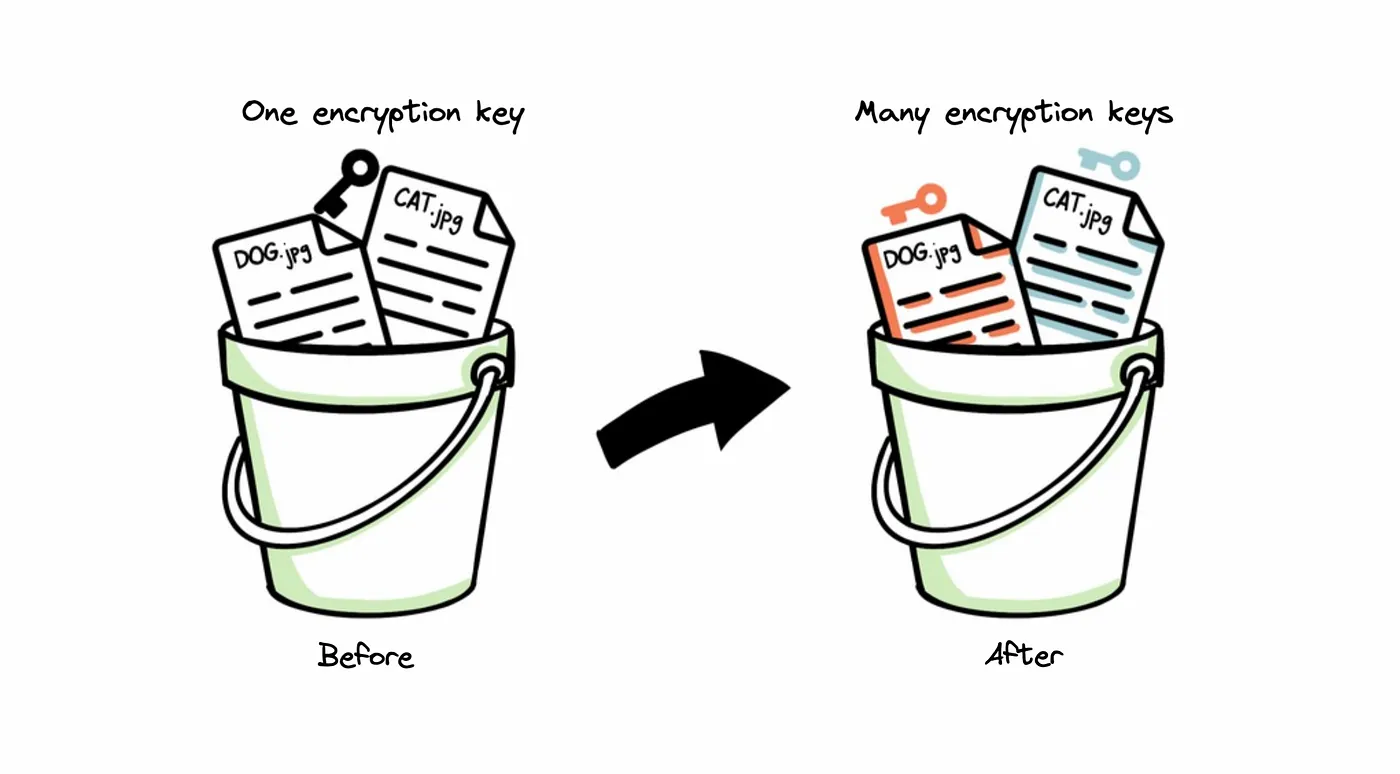

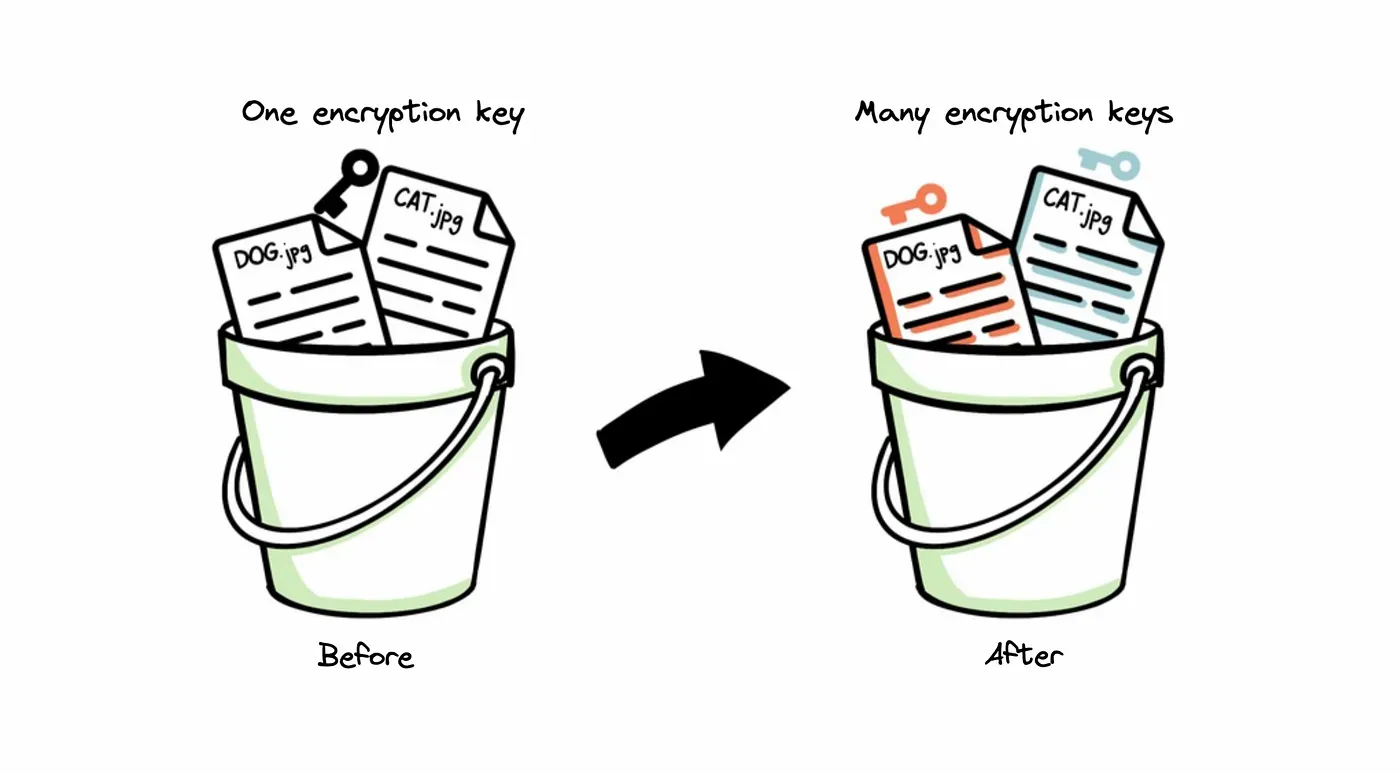

In our example, we have one bucket that stores photos of our pets. All the files are encrypted with one shared encryption key. Even though that works fine for us, we want to improve it a little: we need a unique key for each pet type. We love pets, and we know we will want more and more of them in the future, so our solution should also be extensible. We don’t want to change the implementation every time we get a new type of pet.

What is a KMS?

KMS stands for “Key Management Service”. It’s a managed service responsible for creating and storing encryption keys. These keys can be used by AWS services and by our application to encrypt data. In our case, we use KMS keys to encrypt data in S3 buckets. If you would like to learn more about it, take a look at the AWS KMS documentation.

Creating a multi-region key with an alias

Creating a key

Let’s start with the easiest thing. To use new encryption keys we need to first create them. Let’s make some assumptions:

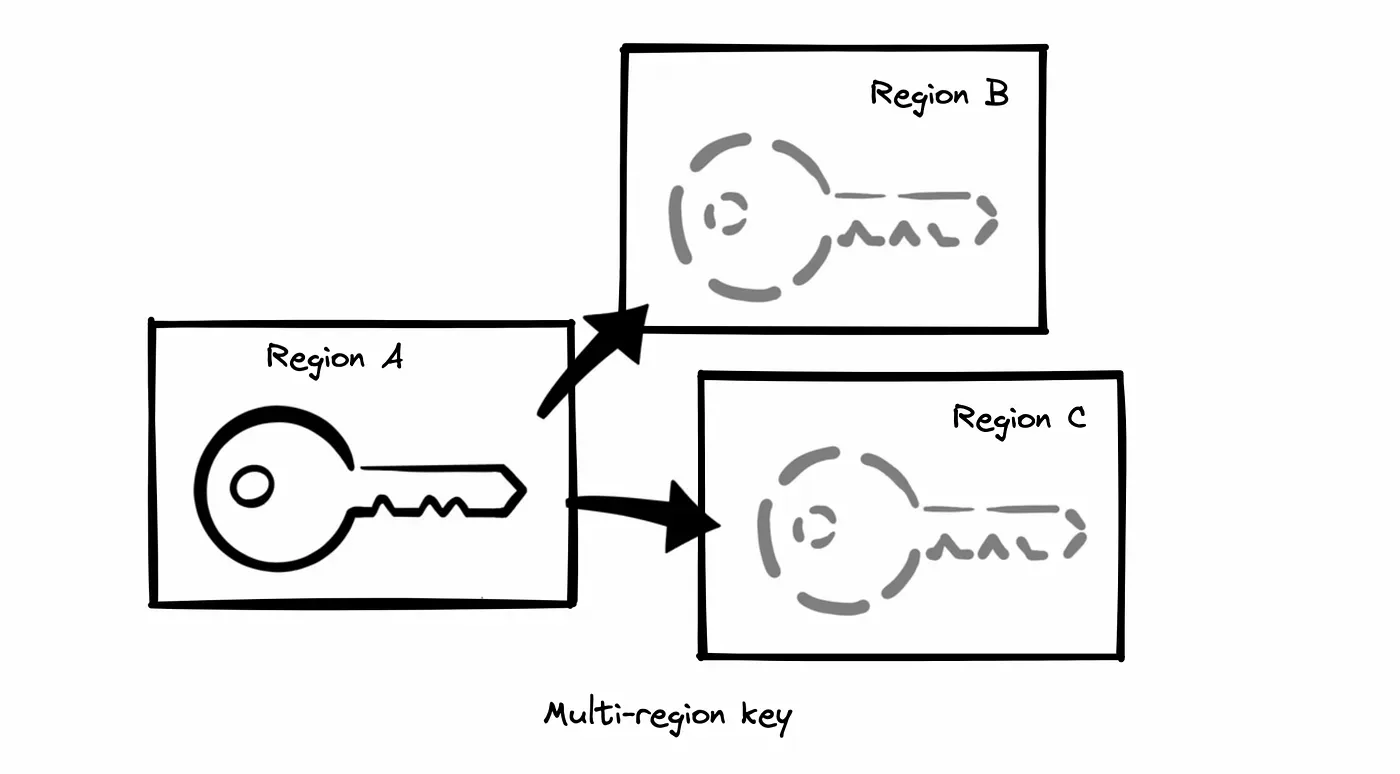

- Our keys will be used in many regions.

- The default policy attached to the KMS keys is not appropriate for us. We need to set up a custom one.

With `aws-sdk` we can create it using a single method, as shown below:

    import KMS from 'aws-sdk/clients/kms';

    const kmsClient = new KMS({region: 'us-east-1'});

    // Custom key policy; the statements are elided here.
    const policy = `{
      "Id": "default_kms_policy",
      "Version": "2012-10-17",
      "Statement": [...]
    }`;

    // Tag used later to grant access to all pet keys at once.
    const tags = [{TagKey: 'KeyType', TagValue: 'PetKmsKey'}];

    async function createKey() {
      const {KeyMetadata} = await kmsClient
        .createKey({
          MultiRegion: true,
          Tags: tags,
          Policy: policy,
          BypassPolicyLockoutSafetyCheck: true,
        })
        .promise();

      // KeyMetadata is always present on a successful response.
      return KeyMetadata!.KeyId!;
    }

Let’s take a look at what we have passed here:

- `MultiRegion: true`: a key can be marked as multi-region only at creation time. If we don’t do it now, we will need to create a new key.

- `Tags`: it’s a good practice to tag our resources. It makes them easy to find later. We can also use tags to grant access to resources that carry a specific tag.

- `Policy`: AWS adds a default policy if we don’t specify ours. Because we want a slightly broader policy, we need to define it ourselves.

- `BypassPolicyLockoutSafetyCheck: true`: a flag that indicates whether to bypass the key policy lockout safety check. If you don’t set it to true, the key policy must allow the principal making the CreateKey request to make a subsequent PutKeyPolicy request on the KMS key, which reduces the risk that the KMS key becomes unmanageable.
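The `Policy` parameter expects a JSON string, and a hand-written template literal is easy to leave malformed. One way around that, sketched below, is to build the policy as an object and serialize it with `JSON.stringify`. The account ID and the single statement are assumptions made for illustration only; they are not the statements used in this article.

```typescript
// Hypothetical account ID, used only for illustration.
const ACCOUNT_ID = '111122223333';

interface KeyPolicyStatement {
  Sid: string;
  Effect: 'Allow' | 'Deny';
  Principal: {AWS: string};
  Action: string | string[];
  Resource: string;
}

// Builds the key policy as an object and serializes it,
// so the resulting JSON is always well-formed.
function buildKeyPolicy(accountId: string): string {
  const statements: KeyPolicyStatement[] = [
    {
      // Assumed statement: lets IAM policies in this account control access to the key.
      Sid: 'EnableIamPolicies',
      Effect: 'Allow',
      Principal: {AWS: `arn:aws:iam::${accountId}:root`},
      Action: 'kms:*',
      Resource: '*',
    },
  ];

  return JSON.stringify({
    Id: 'default_kms_policy',
    Version: '2012-10-17',
    Statement: statements,
  });
}

// The resulting string can be passed as the Policy parameter of createKey.
const keyPolicy = buildKeyPolicy(ACCOUNT_ID);
```

Because the policy is serialized from an object, a missing brace or comma shows up as a compile error instead of a rejected CreateKey request.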

Adding an alias

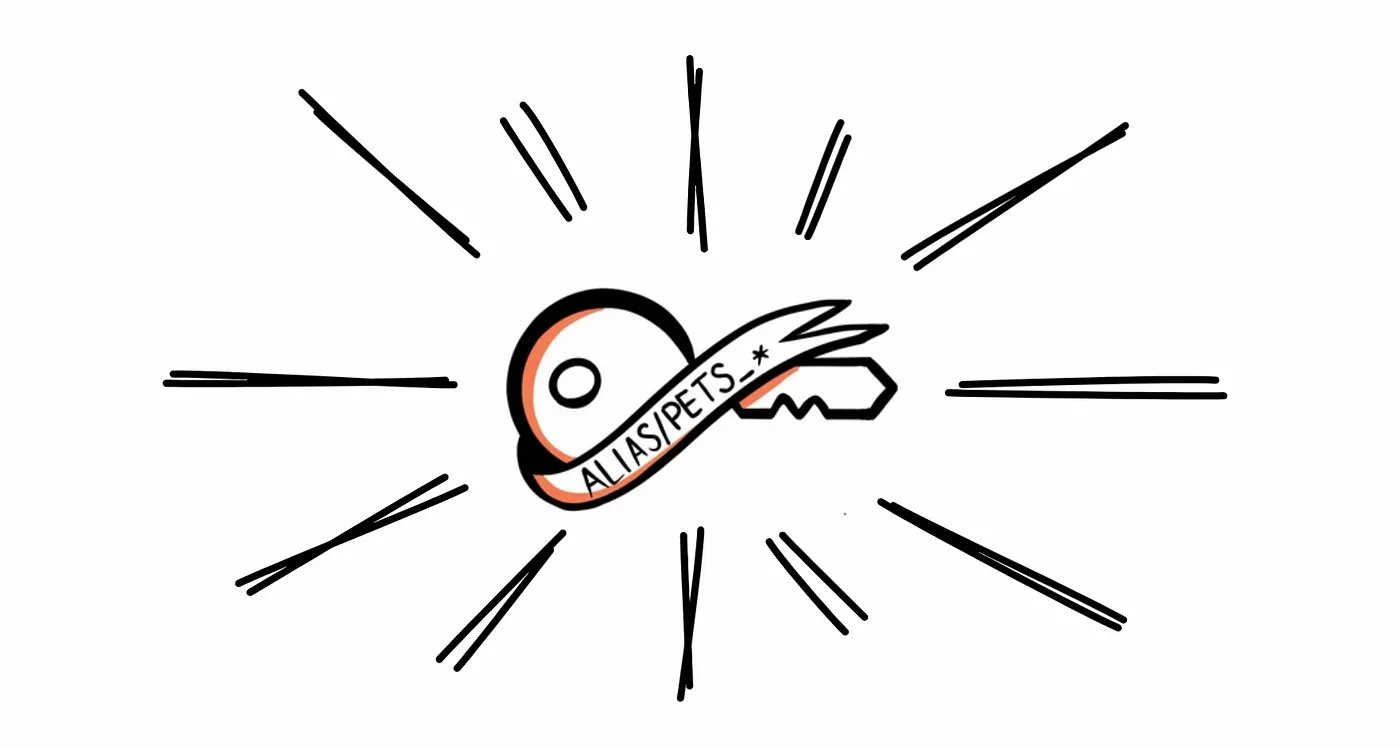

Right now we have our new, shiny key. But working with a bare KeyId is boring. It’s much better when we can tell what a key is for. Is it dedicated to something specific? Are we using it to encrypt photos of our pets? A KeyId alone doesn’t tell us why the key was created. Working with the aws-sdk also gets easier. All you have to do is use aliases.

In our example, keys are used to encrypt pet photos. We need to know which key should be used for encryption and decryption, depending on the pet type. This time we will take the KeyId returned from our createKey method and pass it, together with the alias name, to the function below:

    import KMS from 'aws-sdk/clients/kms';

    const kmsClient = new KMS({region: 'us-east-1'});

    // Associates a human-readable alias with an existing key.
    async function createAlias(keyId: string, alias: string) {
      await kmsClient
        .createAlias({
          TargetKeyId: keyId,
          AliasName: alias,
        })
        .promise();
    }

And that’s all. Right now our key has an associated alias. Below is a short summary of the parameters passed to the function:

- `TargetKeyId`: the `KeyId` returned from the `createKey` method.

- `AliasName`: the value of our alias. It is important to add the `alias/` prefix. In our case, we used `alias/pets_dog` for the first key and `alias/pets_cat` for the second.
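Since every pet type gets its own alias with a shared prefix, the alias name can be derived from the pet type instead of being typed by hand. The helper below is a minimal sketch of that idea; the function name `aliasForPet` and the normalization rules are assumptions, not part of the original setup.

```typescript
// Shared prefix of all pet key aliases, as used in this article.
const PET_ALIAS_PREFIX = 'alias/pets_';

// Derives the alias name for a pet type, e.g. 'Dog' -> 'alias/pets_dog'.
function aliasForPet(petType: string): string {
  const normalized = petType.trim().toLowerCase();

  // KMS alias names may contain only alphanumeric characters,
  // forward slashes, underscores, and dashes.
  if (!/^[a-z0-9_-]+$/.test(normalized)) {
    throw new Error(`Unsupported pet type: "${petType}"`);
  }

  return `${PET_ALIAS_PREFIX}${normalized}`;
}

const dogAlias = aliasForPet('Dog'); // 'alias/pets_dog'
const catAlias = aliasForPet(' cat '); // 'alias/pets_cat'
```

With a helper like this, adding a new pet type only requires creating a key and calling `createAlias` with the derived name.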

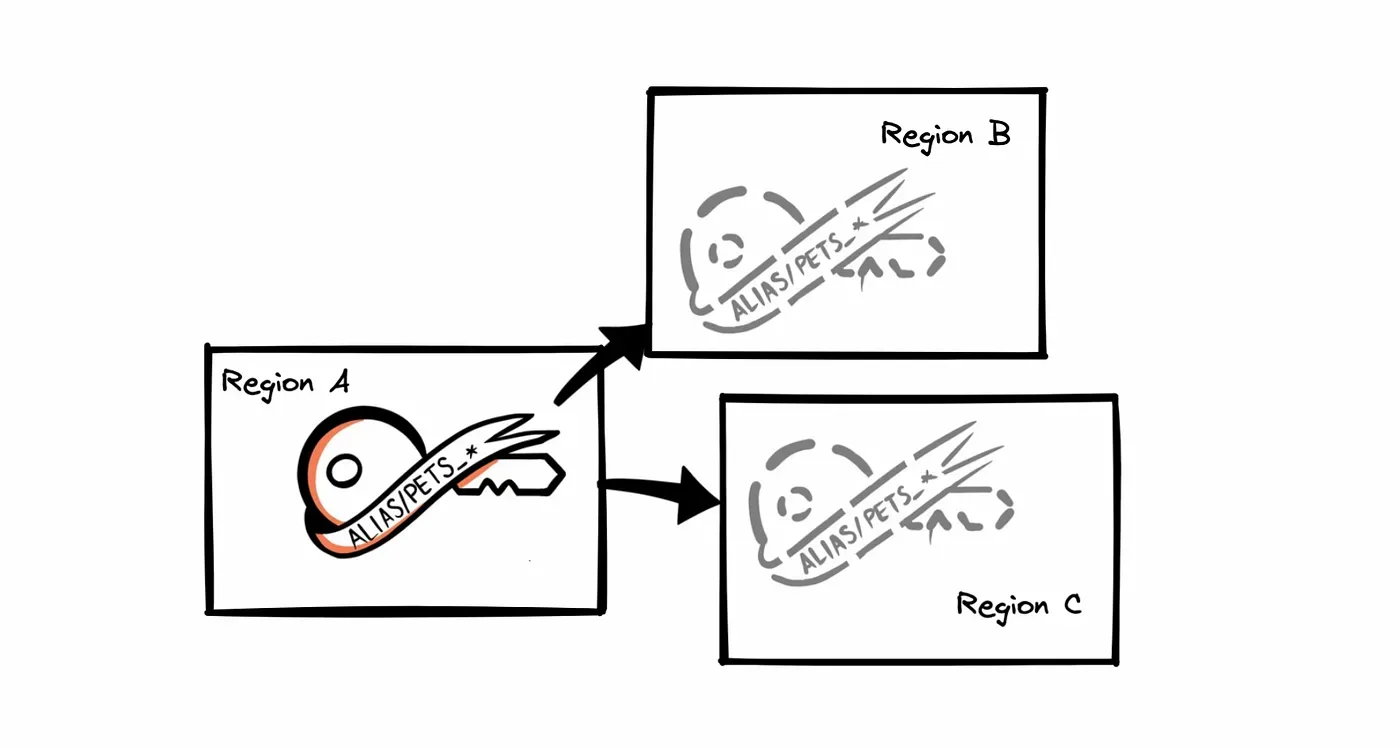

Creating key and alias replicas in another region

The key and the alias are in place, so can we start encrypting some data? Not yet. We have created everything we need, but only in a single region. Resources are not shared between regions, so we need to create replicas in the desired regions.

Key policy and alias synchronization between regions doesn’t exist.

It’s worth mentioning here that we need to keep them synchronized ourselves. A multi-region replica shares its key ID and key material with the primary key, but its policy, tags, and aliases are independent. E.g. when we create a key with a custom policy, we need to pass this policy to the replicated key. If we change something in one key, we need to update all the replicated keys by hand. The same applies to aliases. They are unique per region, so we can create the same alias in a different one. Nevertheless, they are not kept in sync either. Every change of an alias must be applied in all the regions where our replicas live.
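To make the manual synchronization less error-prone, the policy updates can be planned in one place. The sketch below only prepares the parameters for one PutKeyPolicy call per region; the function name `planPolicySync`, the key ID, and the region list are hypothetical, and sending the requests with a per-region KMS client is left out.

```typescript
interface PutKeyPolicyPlan {
  region: string;
  params: {KeyId: string; PolicyName: 'default'; Policy: string};
}

// Prepares one PutKeyPolicy request per region. A multi-region key has the
// same key ID in every region, so only the client region differs.
function planPolicySync(keyId: string, regions: string[], policy: string): PutKeyPolicyPlan[] {
  // Drop duplicate regions so each replica is updated exactly once.
  const uniqueRegions = regions.filter((region, index) => regions.indexOf(region) === index);

  return uniqueRegions.map((region): PutKeyPolicyPlan => ({
    region,
    params: {KeyId: keyId, PolicyName: 'default', Policy: policy},
  }));
}

// Hypothetical key ID and regions, for illustration only.
const syncPlan = planPolicySync(
  'mrk-1234abcd12ab34cd56ef1234567890ab',
  ['us-east-1', 'eu-west-1', 'us-east-1'],
  '{"Version": "2012-10-17", "Statement": []}',
);
```

Each entry in `syncPlan` could then be passed to `putKeyPolicy` on a client created for `entry.region`.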

Creating replicas

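The last interesting thing is the region provided to our KMS client. A client only talks to the region it was created for, and the AWS client won’t allow you to make a cross-region request. The ReplicateKey request goes to the region of the primary key, with ReplicaRegion naming the destination. The alias for the replica, however, must be created by a client configured for the replica’s region. Take a look at the example:

    import KMS from 'aws-sdk/clients/kms';

    // Region of the primary key created earlier.
    const primaryRegion = 'us-east-1';

    async function createReplica(keyId: string, region: string, alias: string) {
      // ReplicateKey is sent to the primary key's region.
      const primaryClient = new KMS({region: primaryRegion});
      // The alias has to be created in the replica's region.
      const replicaClient = new KMS({region});

      // The replica does not inherit the policy or tags, so we pass them again.
      const policy = `{
        "Id": "default_kms_policy",
        "Version": "2012-10-17",
        "Statement": [...]
      }`;

      const tags = [{TagKey: 'KeyType', TagValue: 'PetKmsKey'}];

      const {ReplicaKeyMetadata} = await primaryClient
        .replicateKey({
          KeyId: keyId,
          ReplicaRegion: region,
          Tags: tags,
          Policy: policy,
          BypassPolicyLockoutSafetyCheck: true,
        })
        .promise();

      await replicaClient
        .createAlias({
          TargetKeyId: ReplicaKeyMetadata!.KeyId!,
          AliasName: alias,
        })
        .promise();
    }

Because everything is in place, let’s take a look at how to grant our lambdas access to the encrypted S3 resources.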

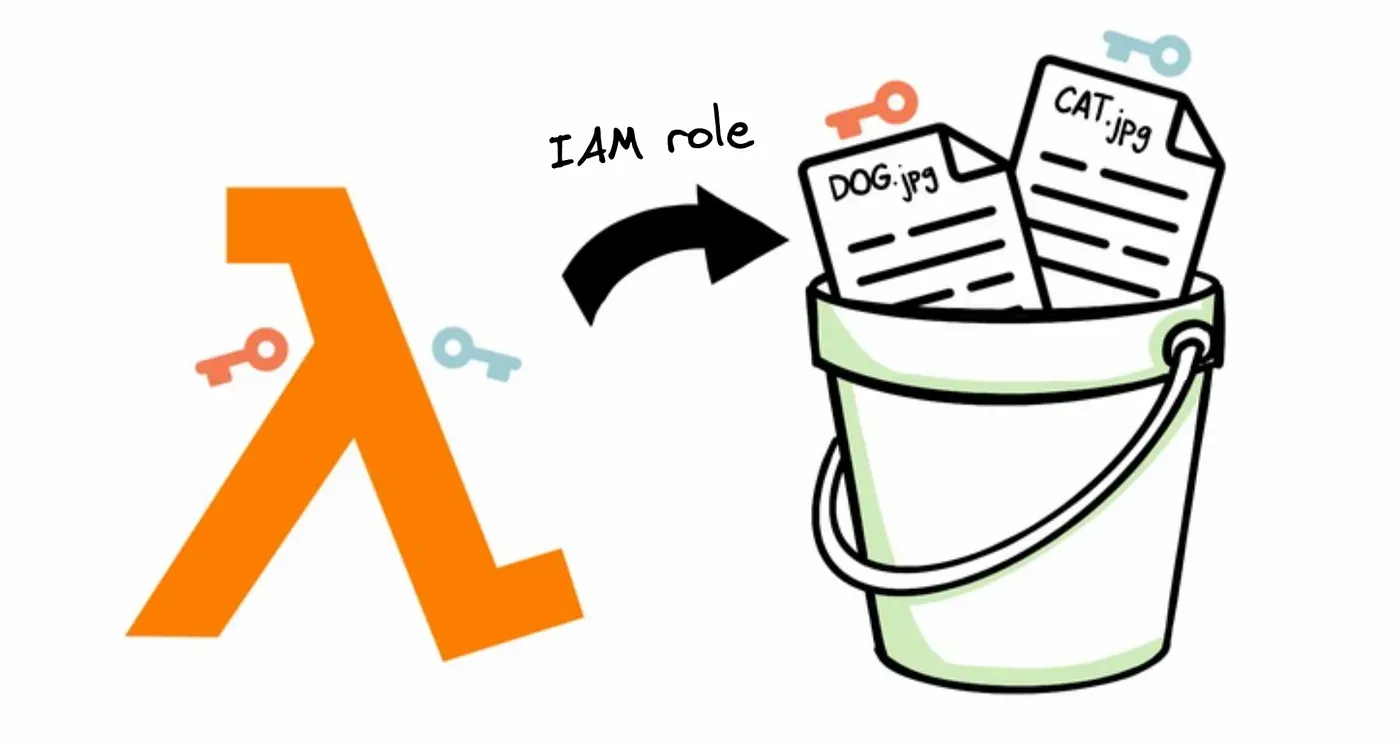

Access to the encrypted S3 files from lambda

We take security seriously, which is why we don’t want to give overly broad access to our keys. Nonetheless, we also don’t want to change our policy every time we add a new key. Right now we have two keys. The first is dedicated to our dog (`pets_dog`) and the second to our cat (`pets_cat`). Let’s take a look at what we can do with them.

Alias-based policy

The first idea I had was to grant access based on the alias value. Our aliases have the same prefix so I thought we could use it to add a condition to the policy.

    - Effect: Allow
      Action:
        - 'kms:CreateGrant'
        - 'kms:Decrypt'
        - 'kms:GenerateDataKey'
      Resource:
        - '*'
      Condition:
        StringLike:
          kms:RequestAlias:
            - "alias/pets_*"

Unfortunately, it won’t work. S3 uses a key ARN to access KMS, so this policy won’t work with e.g. GetObject requests. The request contains no alias, so this condition cannot be used.

Resource tag-based policy

The second approach, which I currently use, is a tag-based policy. In our createKey and replicateKey methods we applied tags to our keys. Each pet encryption key has a KeyType:PetKmsKey tag. As long as only our pets’ keys have this tag, we can easily grant access based on its value:

    - Effect: Allow
      Action:
        - 'kms:CreateGrant'
        - 'kms:Decrypt'
        - 'kms:GenerateDataKey'
      Resource:
        - '*'
      Condition:
        StringEquals:
          aws:ResourceTag/KeyType: "PetKmsKey"

Each lambda with this policy will have access to all keys tagged with KeyType: PetKmsKey. If we have a dedicated function responsible for creating a thumbnail for each pet photo, this policy will allow our lambda to get the file and do the job. Even if we add more keys for other pets, we won’t have to make any changes here.

Remember, the actions defined in this policy depend on what you would like to do in your function. For example, uploading a file to a bucket encrypted with SSE-KMS relies on kms:GenerateDataKey, while a function that only reads files mainly needs kms:Decrypt. Some of the listed actions might be useless for your use case. Review what you need and leave only the necessary actions.
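As an illustration of how a function could pick the key per pet type when uploading, the sketch below builds the parameters for an S3 PutObject request with SSE-KMS. The helper name `buildPetUploadParams`, the `<petType>/<fileName>` object layout, the bucket name, and the key ARN are all assumptions; only the parameter names come from the S3 API.

```typescript
interface EncryptedPutParams {
  Bucket: string;
  Key: string;
  Body: string;
  ServerSideEncryption: 'aws:kms';
  SSEKMSKeyId: string;
}

// Builds PutObject parameters that encrypt the object with a pet-specific key.
// Assumed layout: objects are stored under "<petType>/<fileName>".
function buildPetUploadParams(
  bucket: string,
  petType: string,
  fileName: string,
  body: string,
  kmsKeyArn: string,
): EncryptedPutParams {
  return {
    Bucket: bucket,
    Key: `${petType}/${fileName}`,
    Body: body,
    // Ask S3 to encrypt with KMS and name the exact key to use.
    ServerSideEncryption: 'aws:kms',
    SSEKMSKeyId: kmsKeyArn,
  };
}

// Hypothetical bucket and key ARN, for illustration only.
const dogUploadParams = buildPetUploadParams(
  'pet-photos',
  'dog',
  'rex.jpg',
  '<binary photo data>',
  'arn:aws:kms:us-east-1:111122223333:key/mrk-1234abcd12ab34cd56ef1234567890ab',
);
```

The resulting object could be passed to `putObject` on an S3 client; the function's role then needs access to the named key, for example through the tag-based policy above.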

Specifying each key in a policy

There is another option that we can consider. Earlier, we attached a custom policy to our keys. If we know which lambdas need access to a key, we can list them in the key policy. The only con of this approach is the maintenance of the policy. If a new lambda needs access to the encryption keys, we will need to update all the policies, which requires much more work.

    - Effect: Allow
      Action:
        - kms:CreateGrant
        - kms:Decrypt
        - kms:Encrypt
        - kms:GenerateDataKey
      Resource:
        - arn:aws:kms:*:*:key/<dog_key_id>
        - arn:aws:kms:*:*:key/<cat_key_id>

Replicating the bucket to the secondary region

Policy for our buckets

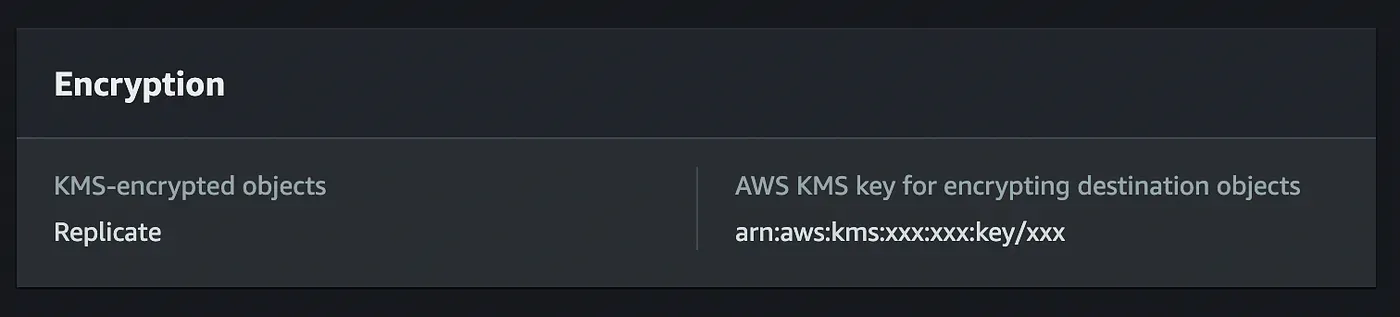

Our data lives in two or more regions. To replicate it we can use S3 replication. But encrypting it with different keys can cause a problem. Unless the policies attached to S3 are very broad, our replication will stop working.

It’s because we forgot to grant S3 access to the new keys. These permissions belong in the policy of the IAM role that S3 uses for replication. To fix it we can copy the policy from our lambdas and adjust it to our needs. This time we need only the kms:Encrypt action.

    - Effect: Allow
      Action:
        - 'kms:Encrypt'
      Resource:
        - '*'
      Condition:
        StringEquals:
          aws:ResourceTag/KeyType: "PetKmsKey"

Problem with automatically encrypting replicated data

If you take a look at the Replication Rules in your S3 bucket’s Management tab, you will notice an Encryption section. It defines a single key that will be used to encrypt all the data from our primary bucket in the secondary one.

Using many keys with replication requires more work from us. Our data won’t be encrypted with the matching key automatically, so we need to add some more mechanisms to automate it. E.g. we can add an event notification that triggers a lambda. This lambda will take the file, check which key was used to encrypt it in the primary region, and re-encrypt the file with the correct key.
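The core of such a re-encrypting lambda could look like the sketch below. It relies on the fact that a multi-region replica has the same key ID as its primary key, so the replica’s ARN differs only in the region. Everything else is an assumption: the helper names, the bucket name, and the idea that the primary key ARN has already been read from the source object (for instance from a HeadObject response).

```typescript
// Matches a multi-region key ARN: arn:<partition>:kms:<region>:<account>:key/mrk-<id>
const MRK_ARN_PATTERN = /^(arn:[^:]+:kms:)([^:]+)(:\d{12}:key\/mrk-[0-9a-f]+)$/;

// Swaps the region of a multi-region key ARN to get the replica's ARN.
function toRegionalKeyArn(keyArn: string, targetRegion: string): string {
  const match = MRK_ARN_PATTERN.exec(keyArn);
  if (match === null) {
    throw new Error(`Not a multi-region KMS key ARN: ${keyArn}`);
  }
  return `${match[1]}${targetRegion}${match[3]}`;
}

interface ReEncryptCopyParams {
  Bucket: string;
  Key: string;
  CopySource: string;
  ServerSideEncryption: 'aws:kms';
  SSEKMSKeyId: string;
}

// Builds CopyObject parameters that copy an object onto itself
// while switching its encryption key to the regional replica.
function buildReEncryptParams(
  bucket: string,
  objectKey: string,
  primaryKeyArn: string,
  replicaRegion: string,
): ReEncryptCopyParams {
  return {
    Bucket: bucket,
    Key: objectKey,
    // CopySource must be URL-encoded; encodeURI keeps the '/' separators.
    CopySource: encodeURI(`${bucket}/${objectKey}`),
    ServerSideEncryption: 'aws:kms',
    SSEKMSKeyId: toRegionalKeyArn(primaryKeyArn, replicaRegion),
  };
}

// Hypothetical bucket, object, and key ARN, for illustration only.
const reEncryptParams = buildReEncryptParams(
  'pet-photos-replica',
  'dog/rex 1.jpg',
  'arn:aws:kms:us-east-1:111122223333:key/mrk-1234abcd12ab34cd56ef1234567890ab',
  'eu-west-1',
);
```

A real handler would pass these parameters to `copyObject` and should skip objects that are already encrypted with the expected key, since the copy itself can trigger the same event notification again.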

Summary

I hope this contrived example will help you better understand how KMS works. Let’s summarize the most important parts:

- Access control and data replication are the hardest parts of managing many keys.

- We need to remember that our key replicas are not kept in sync. Every change must be propagated everywhere.

- Remember to review your policies and stick to the principle of least privilege.

- Data replication in S3 doesn’t work well with many keys. We need an additional mechanism to encrypt data with the right key in other regions.